
Dedicated server

Rent a dedicated server based on Intel and AMD processors in Germany


| CPU | RAM | Storage | Setup fee | Price per month |
|---|---|---|---|---|
| Intel Core i5-13500, 1.8-4.8 GHz (14 cores) | 64 GB | 2 × 512 GB NVMe (NRB) | 69 € | 69 € |
| AMD Ryzen 7 7700X, 3.8-5.3 GHz (8 cores) | 64 GB | 2 × 1000 GB NVMe (NRB) | 69 € | 109 € |
| Intel Core i9-13900, 1.5-5.6 GHz (24 cores) | 64 GB | 2 × 2000 GB NVMe (NRB) | 69 € | 149 € |
| AMD Ryzen 9 7950X3D, 4.2-5.7 GHz (16 cores) | 128 GB | 2 × 2000 GB NVMe (NRB) | 69 € | 189 € |
| 2 × Intel Xeon Silver 4214, 2.2-3.2 GHz (24 cores) | 256 GB | 2 × 4000 GB NVMe (NRB) | 299 € | 499 € |
| 2 × Intel Xeon Gold 6338, 2.0-3.2 GHz (64 cores) | 512 GB | 4 × 8000 GB NVMe (NRB) | 299 € | 999 € |
| 2 × AMD EPYC 7663, 2.0-3.5 GHz (112 cores) | 512 GB | 4 × 8000 GB NVMe (NRB) | 299 € | 1499 € |
| 2 × AMD EPYC 7763, 2.45-3.5 GHz (128 cores) | 1024 GB | 4 × 15360 GB NVMe (NRB) | 299 € | 1999 € |

A variety of upgrade options is available for bare-metal servers.

Reliable technology and modern equipment

We offer proven solutions based on Intel Xeon E3, Xeon E5 and Intel Core processors, and we adopt new products such as Xeon E and AMD Ryzen.

DDoS protection

Optional DDoS protection is available, with a 95% guarantee against network-level DDoS attacks.

Expert technical support

Our technical support team is available 24/7, free of charge. We offer fully managed bare-metal dedicated servers.

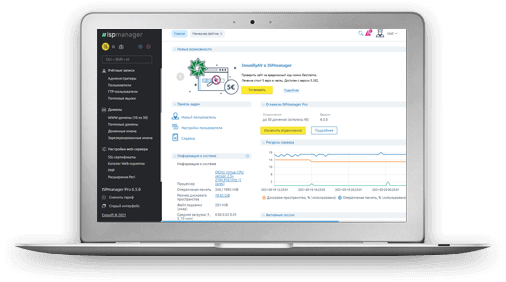

ispmanager Control Panel

The ispmanager control panel makes managing a dedicated server easy, even for beginners.

No command line knowledge required

Everything you need is available straight from the browser window: user and domain management, mail setup, backup creation, file upload, DNS, FTP, PHP, databases and much more.

Learn more about the control panel

Install popular CMSs in just 3 clicks

Install WordPress, Joomla, Drupal and more in just 3 clicks, right from the control panel, and start creating your website.

Fully managed servers

Our experienced engineers will make sure your hosting service performs at its best. We’ll tailor the server to your needs and install any required software. Don’t worry if you have no prior experience maintaining a website: we’ll do everything necessary for you, free of charge.

Server Configuration

We’ll prepare the server and configure the web environment for optimal performance.

Migration Assistance

We’ll transfer your data from another hosting provider and deploy the backups if necessary.

Troubleshooting

If something stops working, we’ll find out why and fix it. We’ll walk you through the steps to prevent the problem from recurring and fully assist you with setting up any additional software you may need. If you need custom software, we’ll provide detailed installation instructions or install it for you.

Software Installation

We’ll install an operating system, additional server software and a CMS for you. Contact technical support to arrange a time.

Security Monitoring

We help our customers secure their data and protect their websites from intruders: we set up protection against DDoS attacks and switch websites to HTTPS by installing an SSL certificate to encrypt data in transit.

70% of support requests are resolved within 15 minutes

We reply around the clock, 7 days a week

99.9% Uptime Guaranteed

Our hosting services are provided strictly in accordance with the Terms of Service.

We guarantee a refund as defined in the SLA.
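For a sense of scale, a 99.9% uptime guarantee leaves a downtime budget of 0.1% of the measurement period. As an illustration only, assuming a 30-day month (the SLA, not this example, defines the actual period and how downtime is counted):

$$
(1 - 0.999) \times 30 \times 24 \times 60\ \text{min} = 0.001 \times 43\,200\ \text{min} = 43.2\ \text{min}
$$

That is, roughly 43 minutes of unavailability per 30-day month stays within a 99.9% guarantee.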

Order a dedicated server

Choose a ready-made server from the list above or configure your own.

Click “Order” and go to the client area to register and complete your purchase.

Once the service is paid for, access details will be emailed to you.